Artificial intelligence has reshaped how brands, creators, and businesses approach content. Blog posts, product descriptions, and marketing campaigns are often drafted with the help of tools like ChatGPT or Gemini. While this has improved efficiency, it also raises questions about trust, transparency, and search engine visibility. As a result, the ability to detect AI writing is no longer a niche skill but a crucial element of SEO strategy.

For SEO professionals, the stakes are high. If content fails to meet quality standards, it risks being flagged as low-value by search systems, even if it sounds polished on the surface. That’s why marketers and business owners need to understand the strengths and the weaknesses of generative AI, along with the ways to check if content passes human and algorithmic scrutiny.

This article breaks down what AI-generated content is, why identifying it matters, the tools available to spot it, and how to use advanced digital tools responsibly in SEO. You’ll also learn practical techniques to evaluate text quality and align your content with Google’s guidelines.

What is AI-generated content?

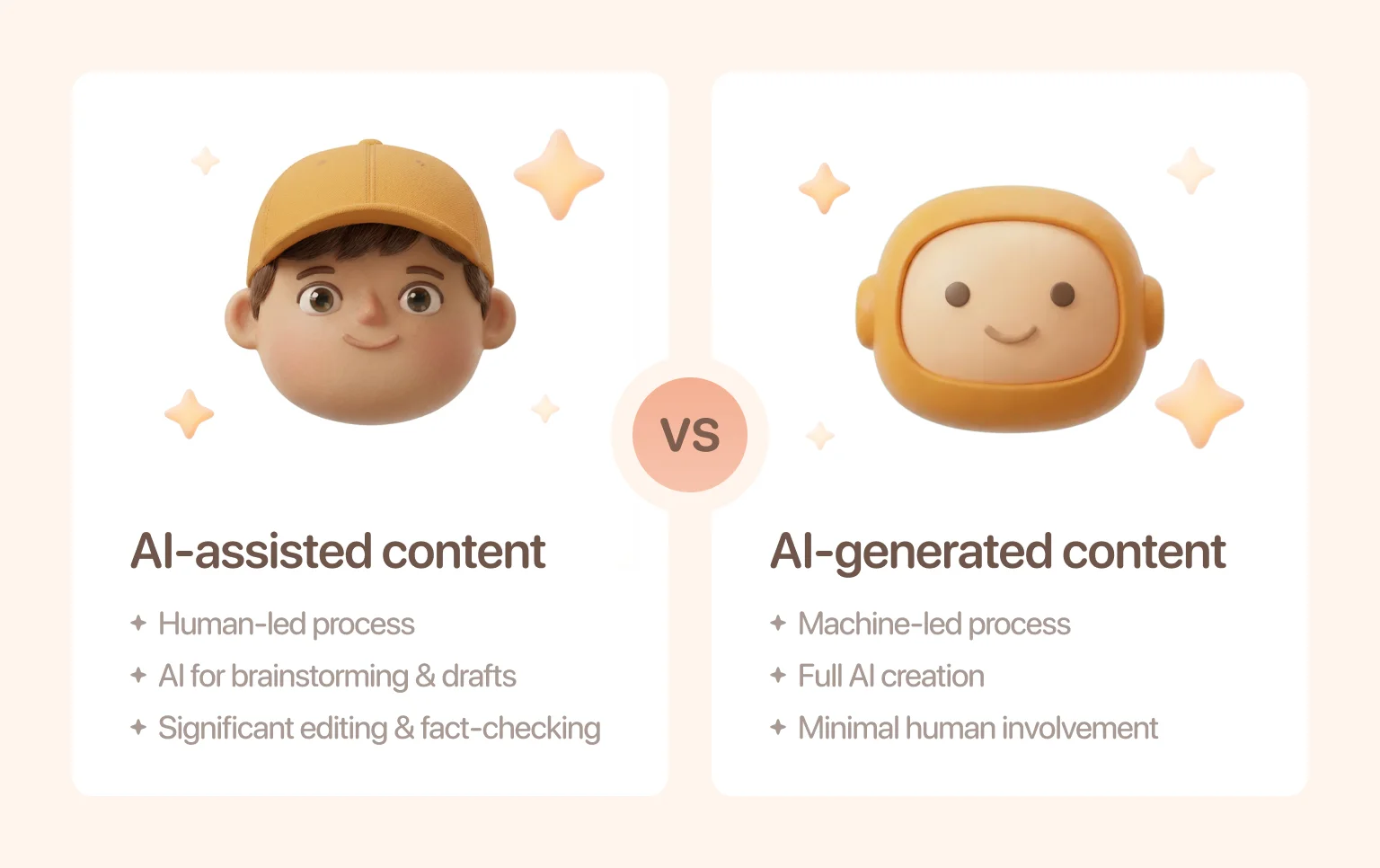

AI-generated content refers to text, images, or multimedia created with machine learning models instead of being written entirely by humans. Within this scope, it’s important to draw a line between AI-assisted and AI-generated work:

- AI-assisted content. A writer uses AI for brainstorming, outlines, or first drafts but applies significant editing, personalization, and fact-checking.

- AI-generated content. Entire pieces are produced by AI with minimal or no human involvement.

In marketing, both approaches show up in everyday tasks such as:

- Drafting blog articles for keyword targeting

- Generating product descriptions at scale

- Creating social media captions or ad copy

- Producing FAQs, summaries, or listicles for quick turnaround

The convenience is undeniable, yet it raises questions around originality, authenticity, and long-term SEO performance. Readers may not notice immediately, but search engines are becoming increasingly adept at identifying patterns and assessing whether text demonstrates genuine expertise.

“AI can draft thousands of words in minutes, but only human oversight ensures they add value.”

Why detecting AI content matters for SEO

The link between generative AI and SEO is complex. On one hand, AI helps teams produce content faster. On the other hand, it introduces risks if the output doesn’t align with search engine expectations. Knowing how to check for AI writing allows brands to safeguard rankings and credibility.

Google’s stance on AI content

Google has repeatedly stated that it doesn’t penalize AI content just because of its origin. Instead, it judges quality. According to Google’s guidance on helpful, people-first content, pages that demonstrate experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) tend to be rewarded. The focus is on usefulness, not the method of creation.

This means you can safely use AI in your workflow, provided the end result feels people-first. If your articles answer questions thoroughly, cite reliable sources, and avoid thin or repetitive text, they can perform well regardless of whether AI drafted the first version.

Impact on E-E-A-T

E-E-A-T has become a critical benchmark. However, AI on its own cannot demonstrate real-world experience or professional credibility. That’s where human input is irreplaceable. For instance, a blog post written purely by AI might explain a concept, but it often lacks the nuanced insights, examples, or first-hand case studies that make readers trust the source.

That’s why brands must integrate expert voices, bylines, and contextual knowledge. Adding commentary from professionals, citing field experience, and showing transparency are all signals that strengthen both reader trust and algorithmic evaluation.

Risk of penalties, content deindexing, or ranking drops

Google doesn’t directly penalize content just because it was generated with AI. However, there are indirect risks that can harm a site’s performance over time. Articles overloaded with generic phrases or filled with excessive keywords may trigger spam signals, leading to lower rankings.

Moreover, if a domain develops a reputation for shallow, repetitive, or unoriginal material, search engines may gradually downgrade its authority. This can result in reduced visibility, suppressed rankings, or, in extreme cases, deindexing of pages due to perceived lack of value, and a loss of trust from both algorithms and human readers.

Reputation and trust signals from both readers and algorithms

Beyond rankings, brand reputation is on the line. Readers today are more sensitive to authenticity. If content feels robotic, repetitive, or impersonal, engagement drops. On the algorithm side, engagement signals such as bounce rate and dwell time are often treated as proxies for how well a page satisfies searchers, and weak engagement tends to go hand in hand with weaker search visibility.

When users stay longer, share posts, and interact with links, it tells Google the content is valuable. When they exit quickly, it suggests the opposite. AI without human oversight risks producing the kind of text that fails this test. Our SEO consulting services help ensure your content stays engaging and aligned with what both users and search engines expect.

How AI-generated content can be identified

Spotting whether a piece of text was produced by a machine often comes down to recognizing patterns. Even though AI tools are becoming more advanced, they still leave behind certain traces that attentive readers or editors can catch. Keep an eye out for the following patterns:

- Uniform sentence structure. AI tends to generate sentences with a similar rhythm and length. This lack of variation makes the text feel flat or mechanical, whereas human writers naturally mix short and long sentences for flow and emphasis.

- Surface-level detail. Many AI outputs provide summaries that sound accurate but stop short of adding depth. They rarely include personal stories, case studies, or first-hand insights that show experience and context.

- Predictable flow. The way AI organizes content often feels too linear and formulaic. Instead of surprising the reader with a new angle or nuanced transition, it sticks to safe patterns that can come across as repetitive.

- Overuse of filler words. To meet a word count, AI may recycle generic phrases or stock expressions that don’t add real value. This padding can make the text sound polished at first glance, but shallow upon closer reading.

If you’re wondering how to tell if something is written by AI, start by asking whether the text demonstrates genuine thought, originality, and lived experience. Human writing often carries imperfections, subtle humor, or a unique turn of phrase — qualities AI still struggles to reproduce convincingly.

Tools to detect AI-generated content

Technology has kept pace with the spread of generative AI, and several platforms now offer detection services. These tools scan text for signs of automation, but their accuracy varies.

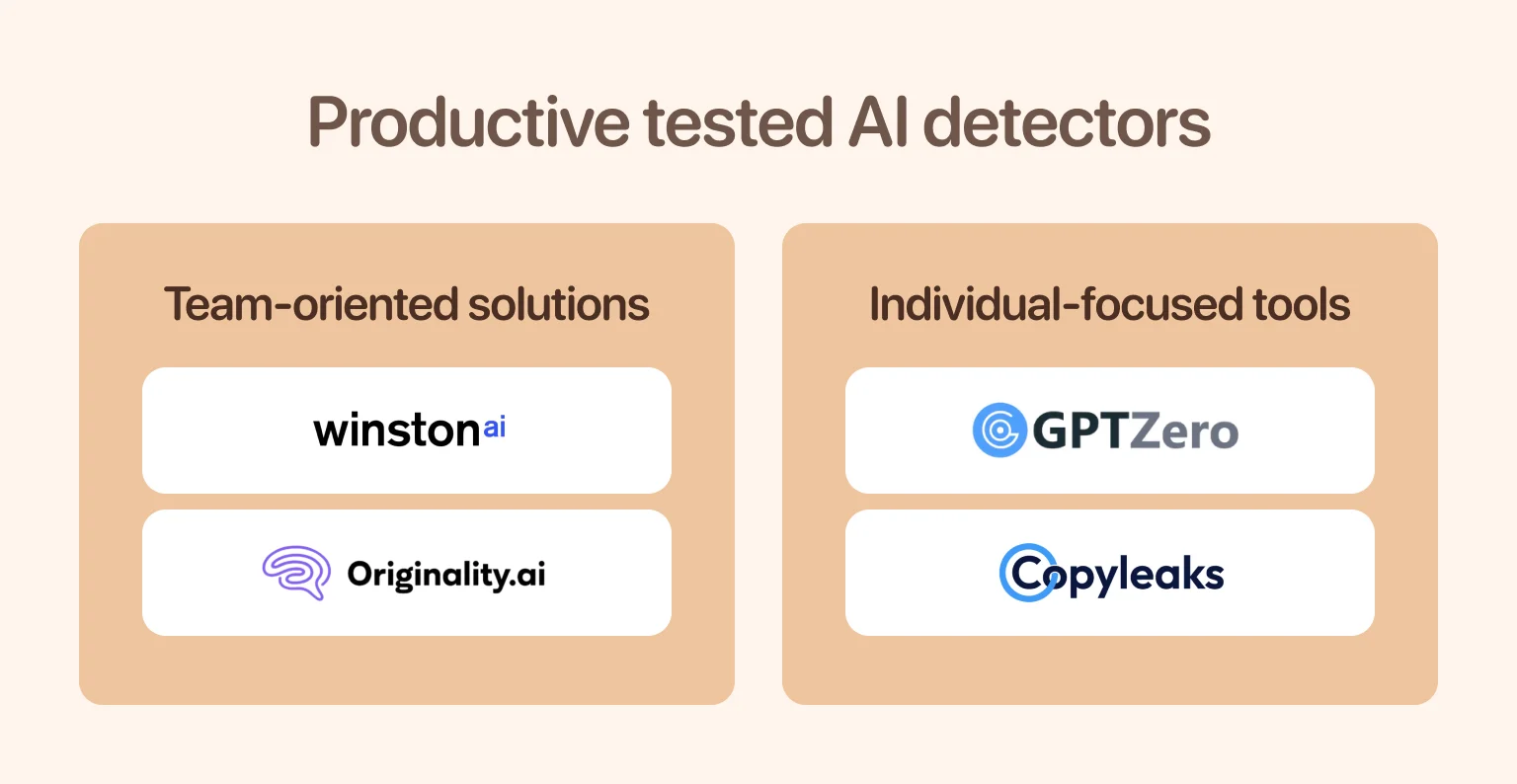

Pros and cons of the best AI detection tools

Here’s a rundown of the most prominent options and their key features.

- GPTZero. Designed for education, its strength is a straightforward interface that makes it easy for students and teachers to use. On the downside, it can produce false positives, especially with polished human essays that may be flagged as AI-written.

- Winston AI. Aimed at SEO and publishing, its advantage is the clear reporting system and integration into editorial workflows, which helps content teams manage output effectively. However, the subscription fees can become expensive for smaller teams with limited budgets.

- Copyleaks. Originally a plagiarism checker, it has expanded into AI detection. Its strength is strong integration with learning management systems, making it a natural fit for schools. The weakness is that it sometimes struggles to tell the difference between text written by humans and text lightly edited with AI.

- Originality.AI. Widely used in digital marketing, particularly by agencies, its advantage is a dashboard that allows managers to oversee multiple writers and projects at once. The weakness, however, is inconsistent scoring across versions, which can reduce confidence in its results.

Each of these platforms helps you identify AI-generated text, but none guarantees 100% accuracy, particularly when analyzing non-native English writing or SEO-optimized content.

How these tools work

Most detection tools rely on classifiers trained to recognize the statistical signatures of generative models. They measure factors like perplexity (how predictable each word is to a language model) and burstiness (how much sentence length and structure vary across the text). Low perplexity combined with low burstiness is read as a sign of machine output. The final result is typically a confidence score rather than a simple yes-or-no verdict.
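To make these two signals more concrete, here is a minimal Python sketch. It is not how any of the tools above actually work: commercial detectors use large language models and trained classifiers. The function names are hypothetical, burstiness is approximated as the coefficient of variation of sentence length, and perplexity is approximated with a toy unigram model built from a reference text you supply.

```python
import math
import re
from collections import Counter


def _tokens(text):
    """Lowercase word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


def sentence_lengths(text):
    """Split on ., ! and ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (std / mean).

    0.0 means every sentence has the same length; higher means more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text, reference):
    """Toy perplexity of `text` under an add-one-smoothed unigram model.

    The model is built from `reference`; all unseen words share one extra bucket.
    Lower values mean the text is more predictable given the reference.
    """
    ref_counts = Counter(_tokens(reference))
    vocab_size = len(ref_counts) + 1  # +1 bucket for unseen words
    total = sum(ref_counts.values())
    words = _tokens(text)
    if not words:
        return float("inf")
    log_prob = sum(
        math.log((ref_counts[w] + 1) / (total + vocab_size)) for w in words
    )
    return math.exp(-log_prob / len(words))
```

A draft where every sentence has the same word count scores a burstiness of 0.0, while a mix of short and long sentences scores higher. Treat both numbers as rough editorial hints, not as evidence of authorship.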

“The takeaway is clear: tools can help, but they should never replace human judgment.”

Manual techniques for spotting AI-written text

Even without specialized tools, editors can often check for AI writing by paying close attention to how the text feels and flows. One of the most reliable methods is to compare it against the writer’s past work. A sudden shift in tone, vocabulary, or sentence rhythm can suggest that the draft may not have been written in the same voice.

Another clue comes from the presence of vague claims that lack evidence or context. When a statement feels like it was pulled from a template rather than grounded in research or personal insight, it raises questions. The same goes for examples: authentic writing usually carries specific details or stories, while AI-driven text often falls back on generic illustrations.

Depth of analysis is another key marker. Machine-generated content frequently summarizes information but avoids taking strong positions or offering unique conclusions. Finally, readers can often sense authenticity in voice. An article might appear polished and grammatically sound, yet still feel lacking in substance. If a piece reads smoothly but leaves the impression of being detached or impersonal, it’s worth asking, “Did AI write this?” and taking a closer look.

Limitations of AI detection

AI detection has improved quickly, but it still has blind spots that matter for editors, educators, and SEO teams. Knowing those limits helps you decide when to trust a score and when to lean on your own review. If you ever wonder how AI can be detected, it helps to understand where detectors fall short and where human judgment fills the gap.

False positives and false negatives

Detectors rely on statistical signals. They are helpful yet imperfect. These deficiencies primarily manifest in two ways:

- False positives happen when polished human text looks machine-like. This is common with formal essays and grammar-checked copy.

- False negatives appear when AI is edited by a human or tuned to produce higher variation. Hybrids can slip through checks even if large parts began as machine output.

Short passages also reduce accuracy. Most tools perform best on longer samples.
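If you keep a small set of texts whose origin you already know, you can estimate both error types for a detector yourself. The sketch below is illustrative only: `error_rates`, the 0.5 threshold, and the sample scores are all hypothetical, not taken from any real tool.

```python
def error_rates(samples, threshold=0.5):
    """Return (false_positive_rate, false_negative_rate).

    `samples` is a list of (detector_score, is_ai) pairs, where the score is
    the detector's AI likelihood between 0 and 1 and `is_ai` is the known truth.
    A text is flagged as AI when its score is at or above `threshold`.
    """
    humans = [score for score, is_ai in samples if not is_ai]
    machines = [score for score, is_ai in samples if is_ai]
    false_positives = sum(1 for score in humans if score >= threshold)
    false_negatives = sum(1 for score in machines if score < threshold)
    fpr = false_positives / len(humans) if humans else 0.0
    fnr = false_negatives / len(machines) if machines else 0.0
    return fpr, fnr


# Hypothetical scores for six texts of known origin.
samples = [
    (0.92, True),   # raw AI draft
    (0.88, True),   # raw AI draft
    (0.35, True),   # AI draft heavily edited by a human
    (0.71, False),  # polished, grammar-checked human essay
    (0.20, False),  # casual human blog post
    (0.10, False),  # human interview notes
]

fpr, fnr = error_rates(samples)
print(f"False positive rate: {fpr:.2f}, false negative rate: {fnr:.2f}")
```

Raising the threshold lowers false positives but lets more edited AI drafts through, and lowering it does the opposite. That trade-off is one reason a single score should not decide publication.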

Advanced prompting that mimics human tone

Writers can guide models to vary sentence length, inject light humor, or reference public facts. Careful prompting makes outputs feel more personal. That narrows the gaps detectors rely on. If your team often asks, “Is this written by AI?”, assume that smart prompting can blur the signals you expect to see.

The rise of AI-human hybrid content

Many teams start with an AI draft, then layer in interviews, data, and brand voice. These hybrids are common in marketing. They can meet quality standards if they show clear expertise. Detectors may label them inconsistently. That is why editors still need a checklist that focuses on depth and usefulness rather than origin.

Legal and ethical considerations

Policies are evolving. Some industries expect disclosure. Others focus on outcomes such as accuracy and user safety. When you publish buyer guides or medical explainers, document your sources, add bylines, and include the name of the person who reviewed the draft. If readers ask if this was written by AI, you can answer with clarity about your process.

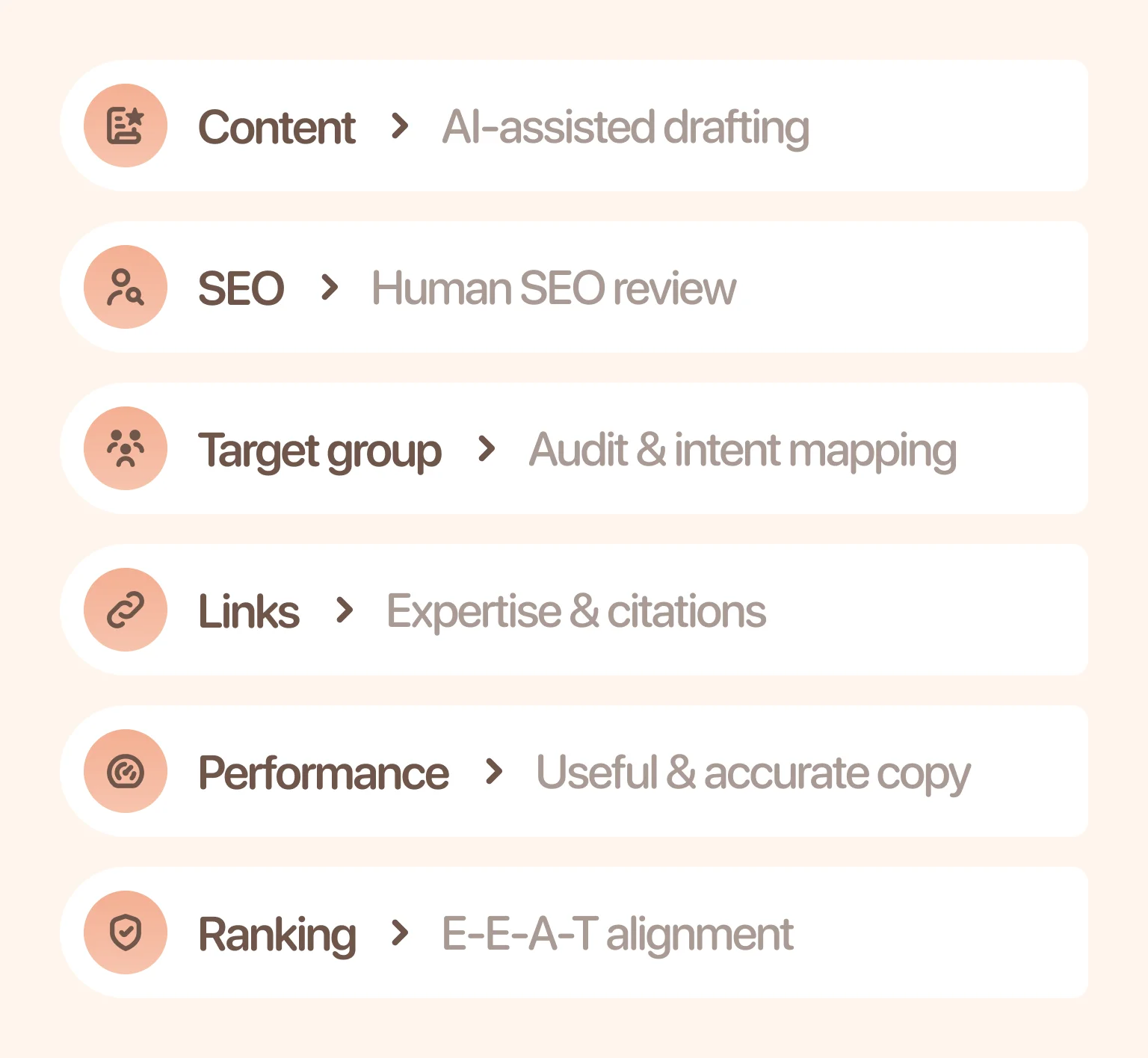

Ethical and strategic use of AI content in SEO

AI can be part of a responsible workflow. It drafts faster, surfaces angles you might miss, and helps outline complex topics. However, you must keep humans in charge. Use AI for speed, then add expertise, examples, and original visuals. If stakeholders ask whether AI-generated content is good for SEO, the honest answer is yes, provided the copy is useful, accurate, and reviewed.

If you need a structured way to plan topics, map intent, and audit gaps, see how our team approaches SEO copywriting. This service focuses on research-driven outlines, human editing, and on-page details that support rankings without sacrificing clarity.

Best practices for making AI-generated content SEO-friendly

You can ship faster with AI and still protect rankings. The following practices keep quality high and align with Google’s helpful content guidance. Use them as a playbook whenever you check for AI writing in your pipeline.

- Start with a clear intent. Tie each article to a search intent and a reader’s job to be done. List the top questions to answer. Also, confirm which sections require firsthand knowledge.

- Outline before drafting. Create a detailed outline that covers definitions, context, steps, examples, and FAQs. Flag where you will add original data or quotes.

- Draft with AI, edit like a pro. Generate sections in small chunks. Rewrite for accuracy and voice. Add your unique insights and replace vague claims with specific sources or personal experiences.

- Insert expert input. Interview a subject specialist or pull notes from your own tests. Add names, roles, and quotes.

- Fact check and cite. Verify names, dates, and statistics and link to reputable sources. Also, always use the correct brand and product details.

- Tighten for clarity. Remove filler phrases, vary rhythm naturally, and add concrete examples and steps.

- Measure and refine. Track session duration, scroll depth, and conversions. Moreover, update or retire weak and poorly performing content.
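The "measure and refine" step above can be turned into a simple triage routine. This is only a sketch under stated assumptions: `PageStats`, `triage`, the URLs, and the thresholds (45 seconds, 50% scroll depth) are hypothetical and should be replaced with values from your own analytics.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    avg_session_seconds: float
    avg_scroll_depth: float  # share of the page scrolled, 0.0 to 1.0
    conversions: int


def triage(pages, min_seconds=45.0, min_scroll=0.5):
    """Group page URLs into 'keep', 'update', or 'retire'.

    A page gets one strike for each weak signal: short sessions, shallow
    scrolling, or zero conversions. No strikes means keep, three strikes
    means retire, and anything in between means update.
    """
    buckets = {"keep": [], "update": [], "retire": []}
    for page in pages:
        strikes = sum([
            page.avg_session_seconds < min_seconds,
            page.avg_scroll_depth < min_scroll,
            page.conversions == 0,
        ])
        if strikes == 0:
            buckets["keep"].append(page.url)
        elif strikes == 3:
            buckets["retire"].append(page.url)
        else:
            buckets["update"].append(page.url)
    return buckets


# Hypothetical pages and numbers, for illustration only.
pages = [
    PageStats("/guide", 120.0, 0.8, 5),
    PageStats("/faq", 30.0, 0.7, 2),
    PageStats("/old-post", 10.0, 0.2, 0),
]
print(triage(pages))
```

A human editor should still review anything marked for retirement, since low numbers can also reflect poor internal linking or seasonal demand rather than weak content.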

Future of AI content and search engines

Search features are changing fast. Summaries in results pages, richer snippets, and follow-up prompts influence what users click. In this scenario, sites that publish content with verifiable detail, unique insights, and strong UX will overtake those that don’t. Teams will keep asking how to identify AI-generated text, yet the bigger question will be how to prove expertise. Expect more focus on:

- Author profiles and reviewer credentials.

- Original visuals, demonstrations, and data.

- Transparent sourcing and update logs.

As AI models improve, detectors will adapt. Editorial standards will matter even more. Teams that mix smart automation with strong judgment will outperform those who chase volume.

Final thoughts

AI detection is useful, but it’s not a silver bullet. The safest path is to blend automated speed with human judgment, then measure real outcomes. If you ever wonder how to check if something is written by AI, start with tools, compare against your checklist, and look for the human signals readers value. Quality is what earns rankings and trust over time.

Frequently Asked Questions

Is AI-generated content bad for SEO?

AI text is not bad by default. Problems arise when articles feel thin, generic, or inaccurate. If your team reviews drafts, adds firsthand knowledge, and cites sources, AI can support SEO. This approach lets you answer the question of whether content is AI-generated with a documented process rather than a guess.

Can Google really detect AI-written content?

Google evaluates usefulness and reliability. It can spot patterns linked to spam or scaled pages with little value. It does not punish content only because a model helped. Human editing and clear expertise reduce the risk that your pages are treated as low-value, and they give you a clear answer if someone asks how to identify AI-generated text on your site.

What happens if my site is flagged for AI content?

Sites that publish low-value pages at scale can face ranking drops or deindexing for those URLs. Review intent, improve depth, and consolidate overlapping posts. You should also use detectors to triage. Then assign editors to rebuild pages that deserve to rank. These steps matter more than a simple 'did AI write this?' check.

Are AI content detectors 100% reliable?

No. They provide likelihood scores, not verdicts. Use them alongside human review. Rely on depth, clarity, citations, and user signals. A single score should not decide publication.

How do I make AI-generated content more human-like?

Add examples from your own tests. Quote staff experts. Show screenshots and step lists. Rewrite generic lines. Vary structure and pacing naturally. These practices help both readers and algorithms. They also address the cues readers rely on when they try to tell if something is written by AI.

Can I rank on Google using AI-generated articles?

Yes, if the copy is accurate, thorough, and helpful. Use AI to speed up drafting and provide structure, then rely on people for insight and accountability. Monitor performance consistently, and refine sections that underperform. When you need more than a basic “AI or not” scan, run a full on-page review and track results to guide improvements.

Will AI-written content be penalized in the future?

Policies will continue to evolve, but the direction is clear: search systems reward usefulness and trust. Content that reflects real experience, cites sources clearly, and offers a strong user experience will remain adaptable. Relying on volume without value, however, only increases risk. The key is a quality plan, regardless of who wrote the first draft.